Range-GAN

Range-Constrained Generative Adversarial Network for Conditioned Design Synthesis

1MIT 2Siemens Technology

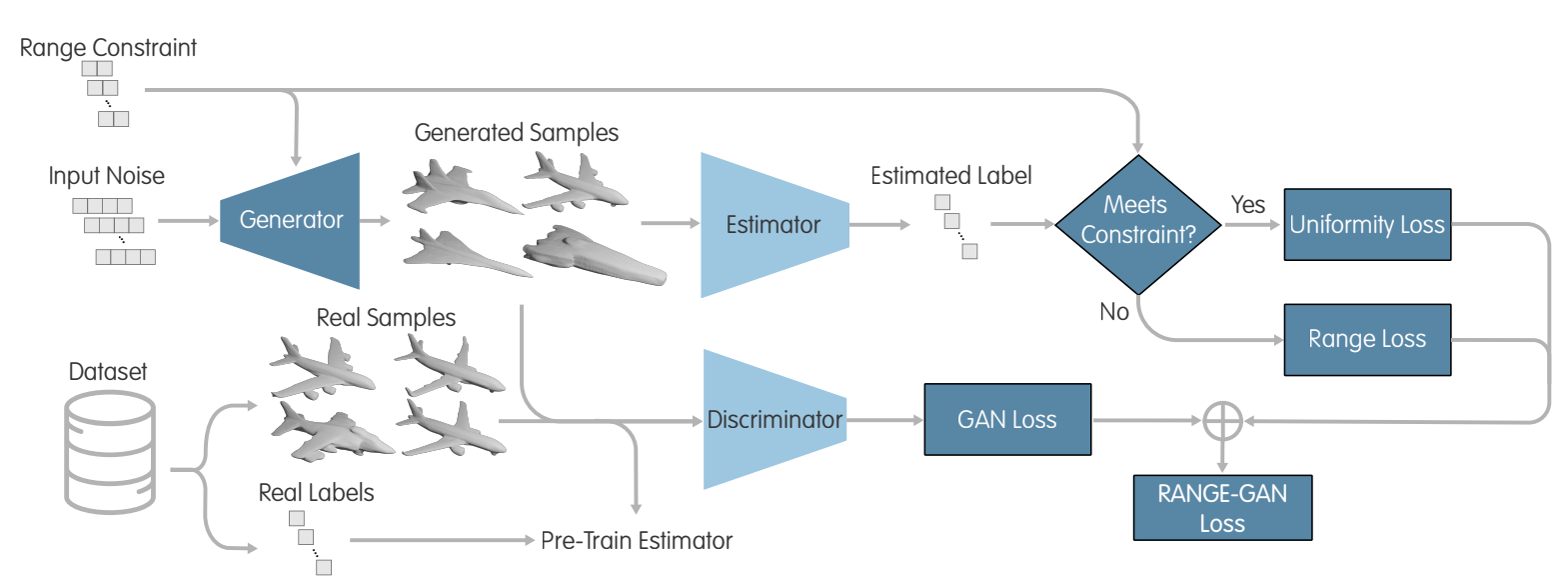

To enforce range constraints in GANs, we introduce our novel architecture as displayed below. In our approach, we condition the generator using an estimator rather than the typical approach in most conditional GANs (cGANs), where the discriminator is used to condition the generator.

To condition the generator through the estimator, we introduce our range loss, which uses the predicted performance of each generated design (from the differentiable estimator) to guide the GAN toward generating designs that meet the input range constraint.

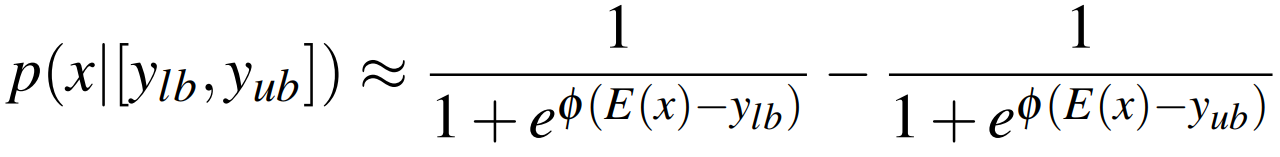

As mentioned before, the range loss is used to guide the GAN in meeting range constraints. To integrate the estimator into the GAN's objective, we propose a novel loss function for the generator. To be effective, this loss function must have two characteristics: 1) it must have a zero gradient for samples within the input condition range (i.e., samples that meet the condition need no further change), and 2) its gradient should gradually decrease as samples get closer to the acceptable range, which stabilizes training. Given that these characteristics are seen in the negative log-likelihood (NLL) function of the GAN objective, we create a similar loss function that applies the same principles to the range conditions. To imitate the NLL, we need a mechanism that turns predicted continuous labels into probabilities of condition satisfaction. To do this, we use two sigmoid functions shifted to the lower and upper bounds of the given range condition to estimate the probability of condition satisfaction.

Then we set the negative likelihood calculated using this approximate probability as the loss term for Range-GAN.
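The two-sigmoid construction described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the exact formulation from the paper: it assumes the two sigmoids are combined as a product, and the function names and the `sharpness` parameter (which controls how steep each sigmoid is at its bound) are hypothetical. With this form the gradient inside the range is only approximately zero, approaching zero as the sample moves away from either bound.

```python
import numpy as np

def sigmoid(x):
    # Logistic function written via tanh to avoid overflow for large |x|.
    return 0.5 * (1.0 + np.tanh(0.5 * x))

def range_loss(y_pred, lower, upper, sharpness=50.0):
    """Negative log of an approximate probability that y_pred is in [lower, upper].

    Assumed form (illustrative): p = sigmoid(k*(y - lower)) * sigmoid(k*(upper - y)).
    -log(sigmoid(x)) is computed stably as logaddexp(0, -x).
    """
    k = sharpness
    per_sample = (np.logaddexp(0.0, -k * (y_pred - lower))
                  + np.logaddexp(0.0, -k * (upper - y_pred)))
    return float(np.mean(per_sample))

def range_loss_grad(y_pred, lower, upper, sharpness=50.0):
    # Analytic derivative of the per-sample loss with respect to y_pred.
    k = sharpness
    return (-k * sigmoid(-k * (y_pred - lower))
            + k * sigmoid(-k * (upper - y_pred)))

# Hypothetical estimator outputs: one inside the range, one below, one above.
y = np.array([0.5, 0.0, 1.0])
print(range_loss(y[:1], 0.4, 0.6))     # small loss for a sample inside the range
print(range_loss_grad(y, 0.4, 0.6))    # near zero inside, large magnitude outside
```

In this sketch, a sample below the range receives a negative gradient (increasing the predicted label lowers the loss) and a sample above the range receives a positive one, while samples well inside the range are left almost untouched.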

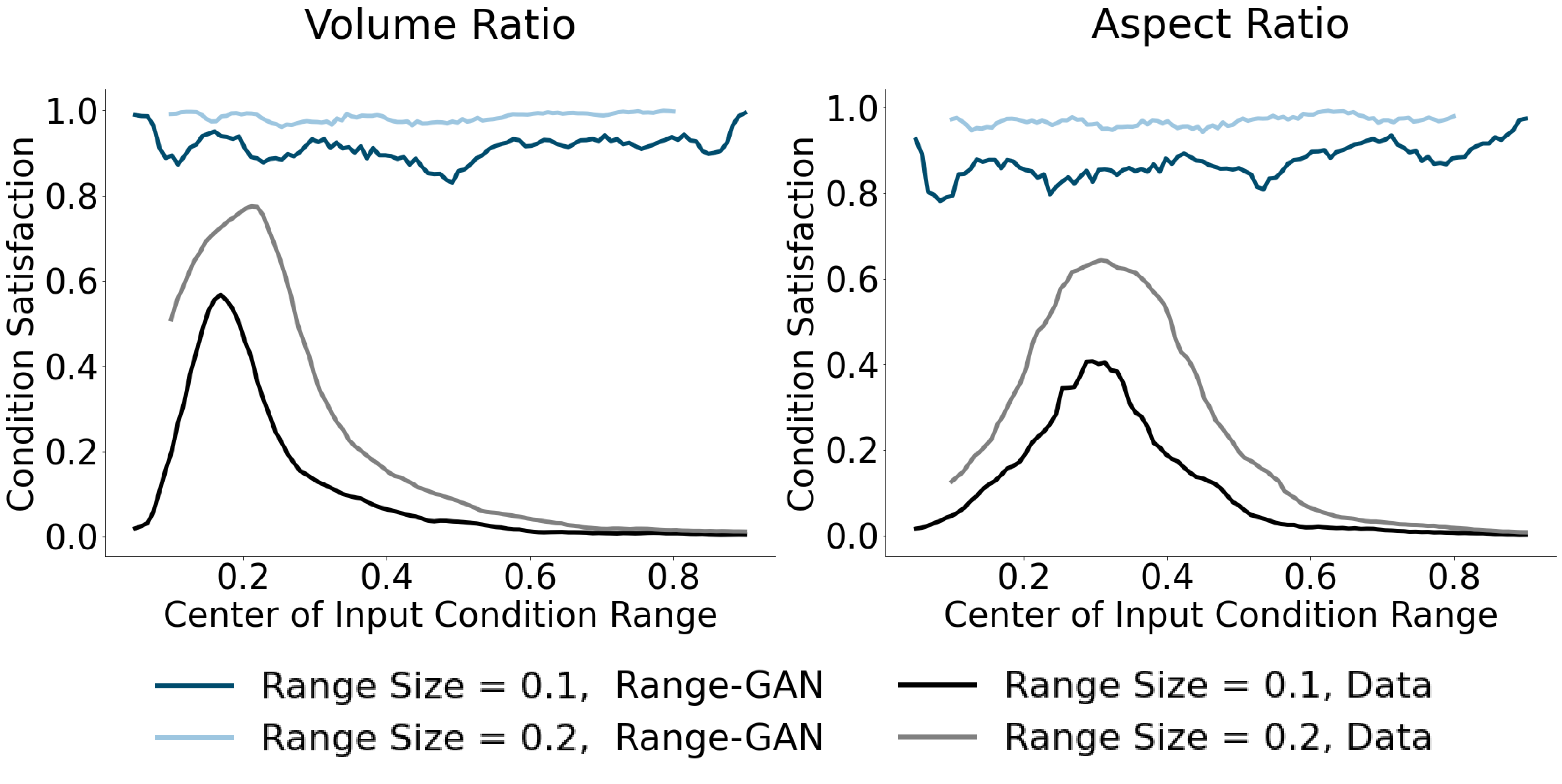

Using this range loss, we can effectively generate samples within the desired range constraints, as shown by the input constraint satisfaction results below.

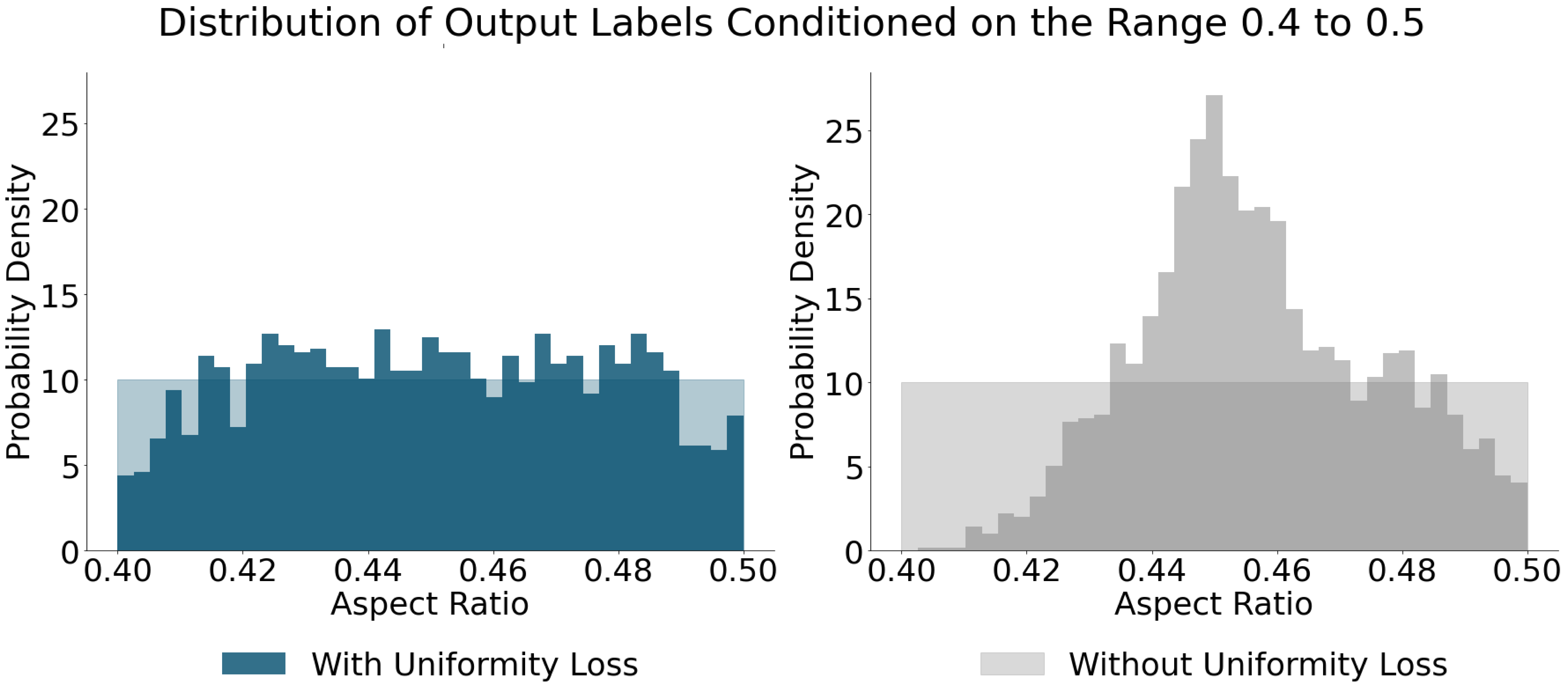

To explore the design space effectively, we must generate designs that cover the acceptable range as uniformly as possible, as this would cover the largest subset of the design space and provide the most diverse set of designs necessary for effective design space exploration. To do this, we apply our uniformity loss, which is detailed in the paper. By doing this, we can move Range-GAN from uneven distributions of generated samples to a more even distribution of samples in the label space. Below is an image showing an example of how this loss term improves the distribution in the samples.

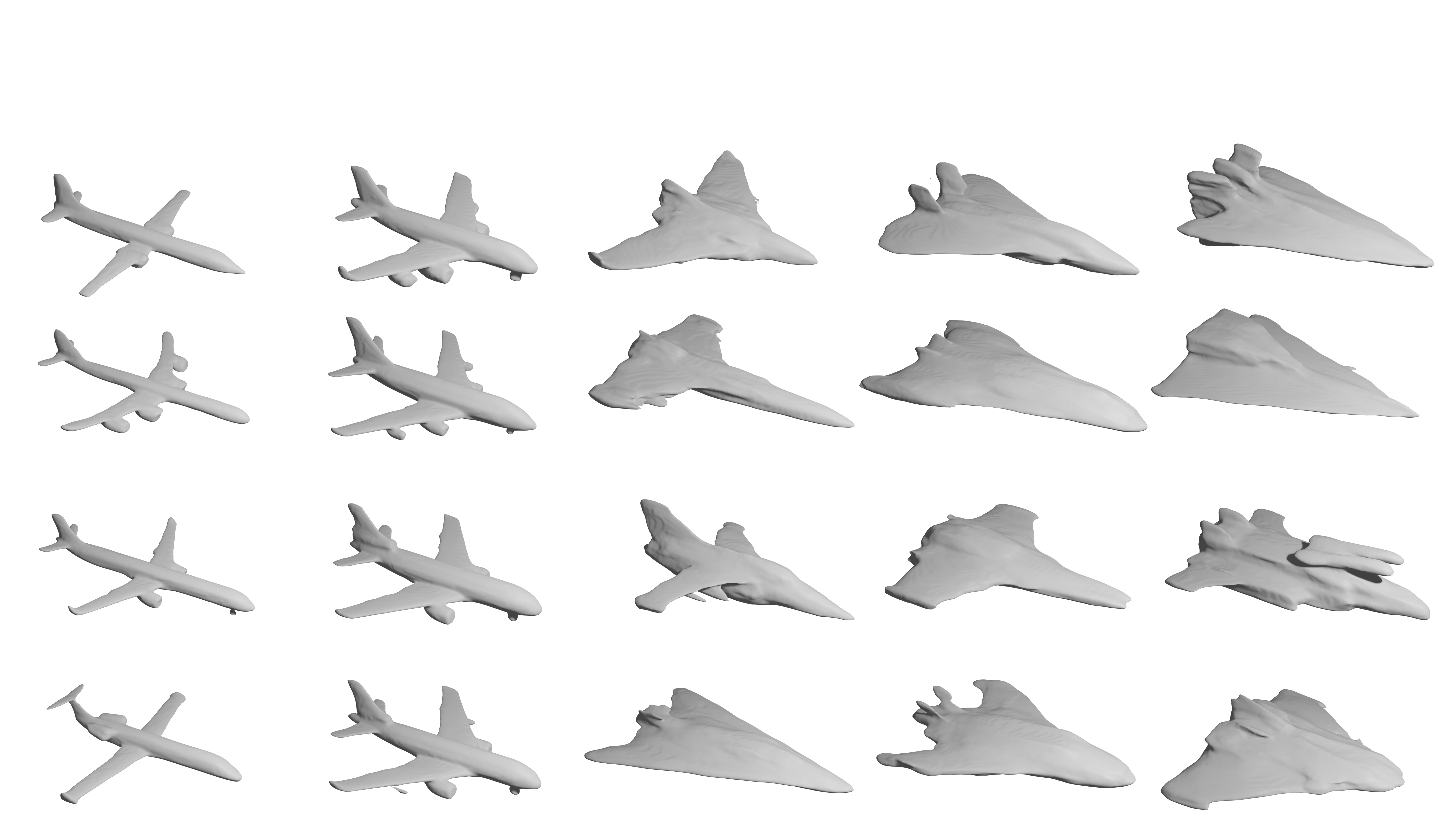

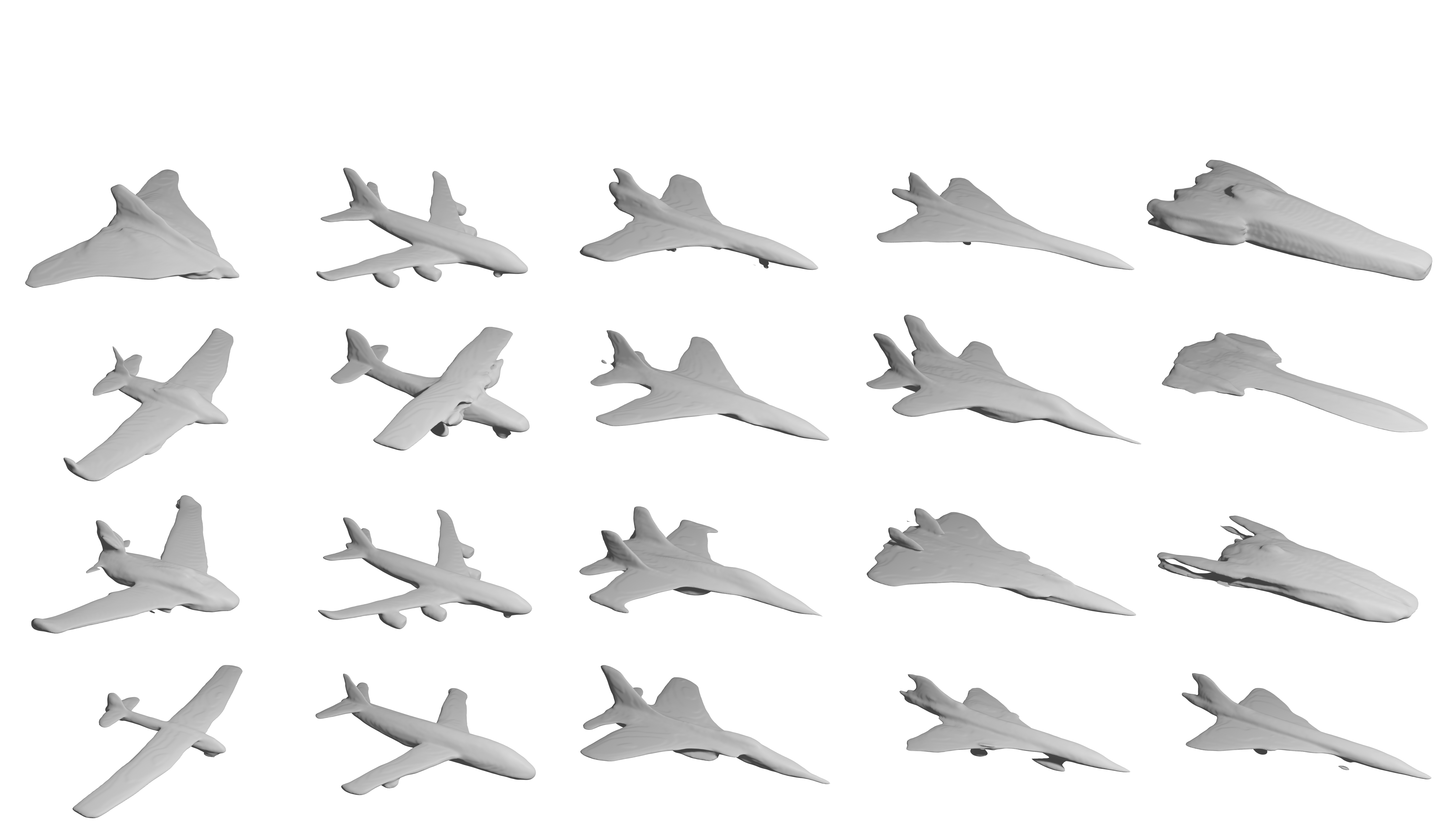

In our study, we explore the case study of generating 3D airplane models conditioned on aspect ratio and volume. The quantitative results were presented before, but here we present our results visually.

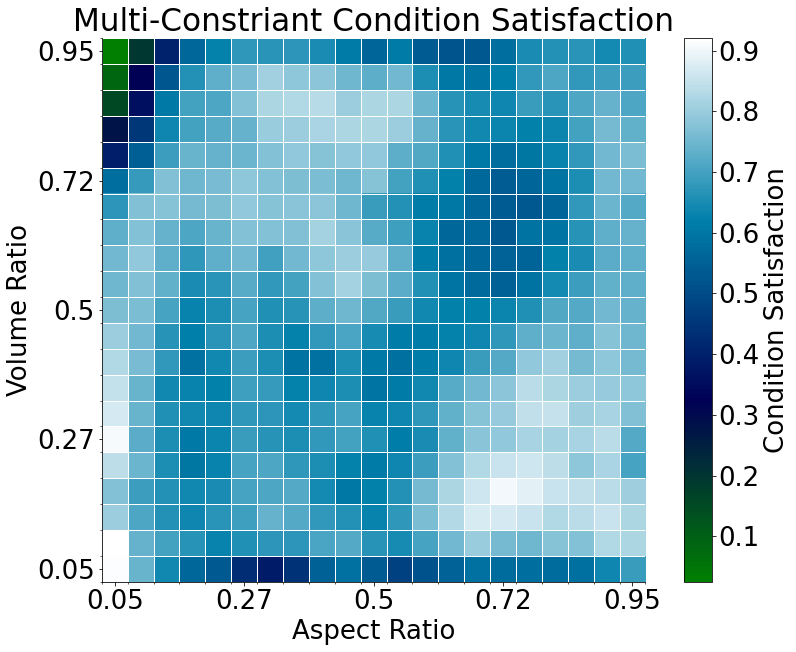

The approach presented in Range-GAN can be applied to as many objectives as needed. This is because the uniformity and range losses apply to each objective separately and can be combined as a sum (or a weighted sum) to form the multi-objective loss. Below we showcase the results of a multi-objective case study.
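As a rough sketch of how per-objective terms might be combined, the snippet below computes a range loss for each objective and forms a weighted sum. The two-sigmoid form of the per-objective loss, the function names, the `weights` argument, and the `sharpness` parameter are all illustrative assumptions; the uniformity term is omitted because its definition is given in the paper rather than here.

```python
import numpy as np

def range_loss(y_pred, lower, upper, sharpness=50.0):
    # Assumed per-objective range loss: -log of a product of two shifted sigmoids,
    # written with logaddexp for numerical stability.
    k = sharpness
    return float(np.mean(np.logaddexp(0.0, -k * (y_pred - lower))
                         + np.logaddexp(0.0, -k * (upper - y_pred))))

def multi_objective_range_loss(y_pred, lower, upper, weights=None, sharpness=50.0):
    """Weighted sum of per-objective range losses.

    y_pred: array of shape (n_samples, n_objectives) with predicted labels.
    lower, upper: arrays of shape (n_objectives,) with the range bounds.
    weights: optional per-objective weights (defaults to an unweighted sum).
    """
    y_pred = np.asarray(y_pred, dtype=float)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    n_obj = y_pred.shape[1]
    w = np.ones(n_obj) if weights is None else np.asarray(weights, dtype=float)
    per_objective = np.array([
        range_loss(y_pred[:, j], lower[j], upper[j], sharpness)
        for j in range(n_obj)
    ])
    return float(np.dot(w, per_objective)), per_objective

# Hypothetical example: the first objective is satisfied, the second is not.
total, parts = multi_objective_range_loss(
    [[0.5, 0.0]], lower=[0.4, 0.4], upper=[0.6, 0.6], weights=[1.0, 0.5]
)
print(total, parts)
```

Because each term depends only on its own objective, the second (violated) objective dominates the total here, and the weights let one objective be prioritized over another.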

Nobari, A. H., Chen, W., and Ahmed, F. (October 11, 2021). Range-Constrained Generative Adversarial Network: Design Synthesis Under Constraints Using Conditional Generative Adversarial Networks. ASME. J. Mech. Des. February 2022; 144(2): 021708. https://doi.org/10.1115/1.4052442

@article{10.1115/1.4052442,
    author = {Amin Heyrani Nobari and Wei Chen and Faez Ahmed},
    title = {Range-Constrained Generative Adversarial Network: Design Synthesis Under Constraints Using Conditional Generative Adversarial Networks},
    journal = {Journal of Mechanical Design},
    volume = {144},
    number = {2},
    year = {2021},
    month = {10},
    issn = {1050-0472},
    doi = {10.1115/1.4052442},
    url = {https://doi.org/10.1115/1.4052442},
    note = {021708},
}

The authors acknowledge the MIT SuperCloud and Lincoln Laboratory Supercomputing Center for providing HPC resources that have contributed to the research results reported within this paper.