Car Drag Prediction

Surrogate Modeling of Car Drag Coefficient with Depth and Normal Renderings

1MIT 2Toyota Research Institute (Boston) 3Toyota Research Institute (Los Altos)

The dataset, code, and published paper of this project are available here:

Generative AI models have made significant progress in automating the creation of 3D shapes, which has the potential to transform car design. In engineering design and optimization, evaluating engineering metrics is crucial. To make generative models performance-aware and enable them to create high-performing designs, surrogate modeling of these metrics is necessary. However, the currently used representations of three-dimensional (3D) shapes either require extensive computational resources to learn or suffer from significant information loss, which impairs their effectiveness in surrogate modeling. To address this issue, we propose a new two-dimensional (2D) representation of 3D shapes. We develop a surrogate drag model based on this representation to verify its effectiveness in predicting 3D car drag. To train our model, we construct a diverse dataset of 9,070 high-quality 3D car meshes labeled with drag coefficients computed from computational fluid dynamics (CFD) simulations. Our experiments demonstrate that our model can accurately and efficiently evaluate drag coefficients, with an R^2 value above 0.84 for various car categories. Moreover, the proposed representation method can be generalized to many other product categories beyond cars. Our model is implemented using deep neural networks, making it compatible with recent AI image generation tools (such as Stable Diffusion) and a significant step towards the automatic generation of drag-optimized car designs.

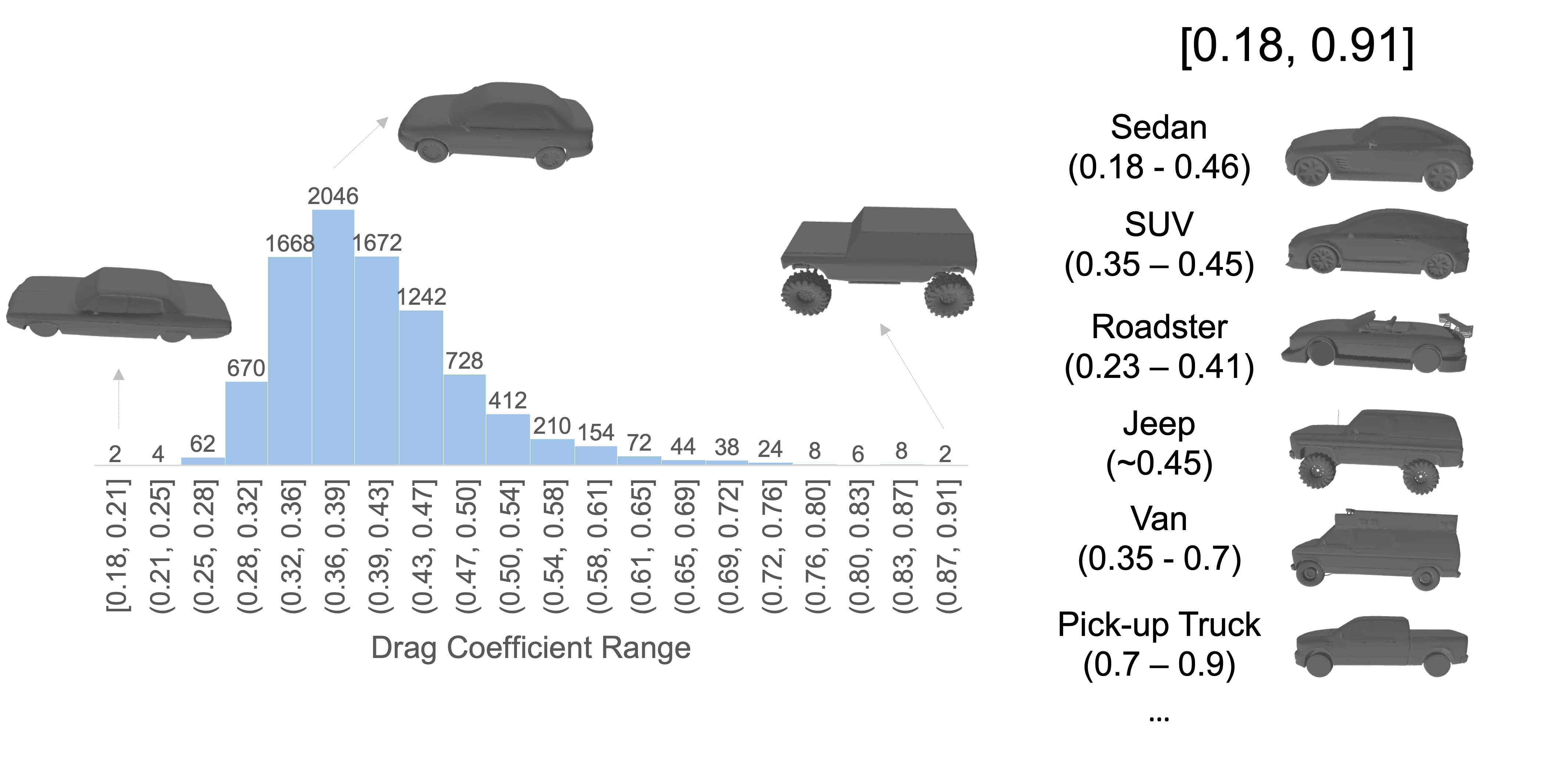

The 3D car meshes used in this project originate from the ShapeNet V1 dataset, which contains 7,497 3D car meshes of varying surface quality. A substantial percentage of the original car meshes from ShapeNet are not watertight, with unsealed areas or holes on the surfaces. Reliable CFD simulation of car drag coefficients requires meshes with high surface quality. Therefore, we manually checked the surface quality of each car mesh from ShapeNet and selected a subset of 2,474 high-quality car meshes. Since most of the selected meshes are still imperfect, we further repaired them using the repair module in Autodesk Netfabb Premium. This dataset covers a variety of car configurations, such as pick-up trucks, sedans, sport utility vehicles, wagons, and combat vehicles. This diversity helps our learned surrogate models generalize across car types.

In addition, we employed two different approaches to augment the original dataset. First, we resized the width of each car using a random coefficient between 0.83 (i.e., 1/1.2) and 1.2. The resizing augmentation created another 2,474 cars with slightly different widths and drag coefficients from the original cars, resulting in a dataset of 4,948 different cars in total. Second, since the car meshes are not perfectly bilaterally symmetric but their drag coefficients are invariant to bilateral flipping, we employed a flipping augmentation to create another 4,948 cars, which have exactly the same drag coefficients as their unflipped counterparts.
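The two augmentations described above can be sketched on raw vertex and face arrays. This is a minimal illustration, not the project's code: the function names, the choice of axis 1 as the width axis, and uniform sampling of the resizing coefficient are all assumptions, since the text only states the coefficient range.

```python
import numpy as np

def resize_width(vertices, rng, width_axis=1, max_ratio=1.2):
    """Scale the width axis by a random coefficient in [1/max_ratio, max_ratio].

    Uniform sampling is an assumption; the source only gives the range.
    """
    coeff = rng.uniform(1.0 / max_ratio, max_ratio)
    scaled = vertices.copy()
    scaled[:, width_axis] *= coeff
    return scaled, coeff

def flip_bilateral(vertices, faces, width_axis=1):
    """Mirror the mesh across the plane width = 0."""
    mirrored = vertices.copy()
    mirrored[:, width_axis] *= -1.0
    # Mirroring inverts triangle orientation, so reverse each face's
    # vertex order to keep the surface normals pointing outward.
    return mirrored, faces[:, ::-1].copy()

# Toy tetrahedron standing in for a car mesh (hypothetical data).
vertices = np.array([[0.0, 0.0, 0.0],
                     [1.0, 0.0, 0.0],
                     [0.0, 1.0, 0.0],
                     [0.0, 0.0, 1.0]])
faces = np.array([[0, 2, 1], [0, 1, 3], [0, 3, 2], [1, 2, 3]])

rng = np.random.default_rng(0)
resized, coeff = resize_width(vertices, rng)
flipped, flipped_faces = flip_bilateral(resized, faces)
```

A resized car needs a new CFD simulation because its drag coefficient changes, whereas a flipped car can reuse the label of the mesh it was mirrored from.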

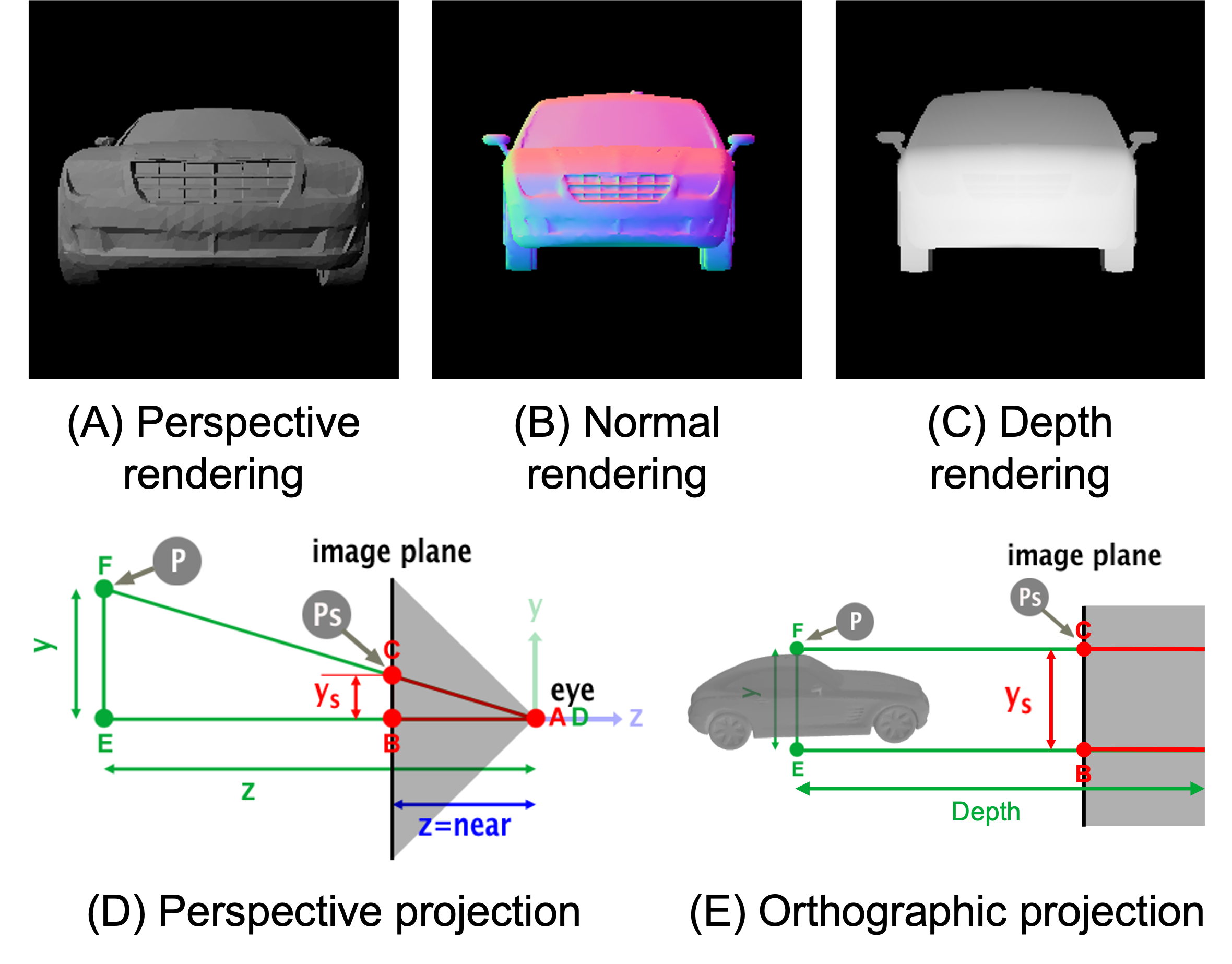

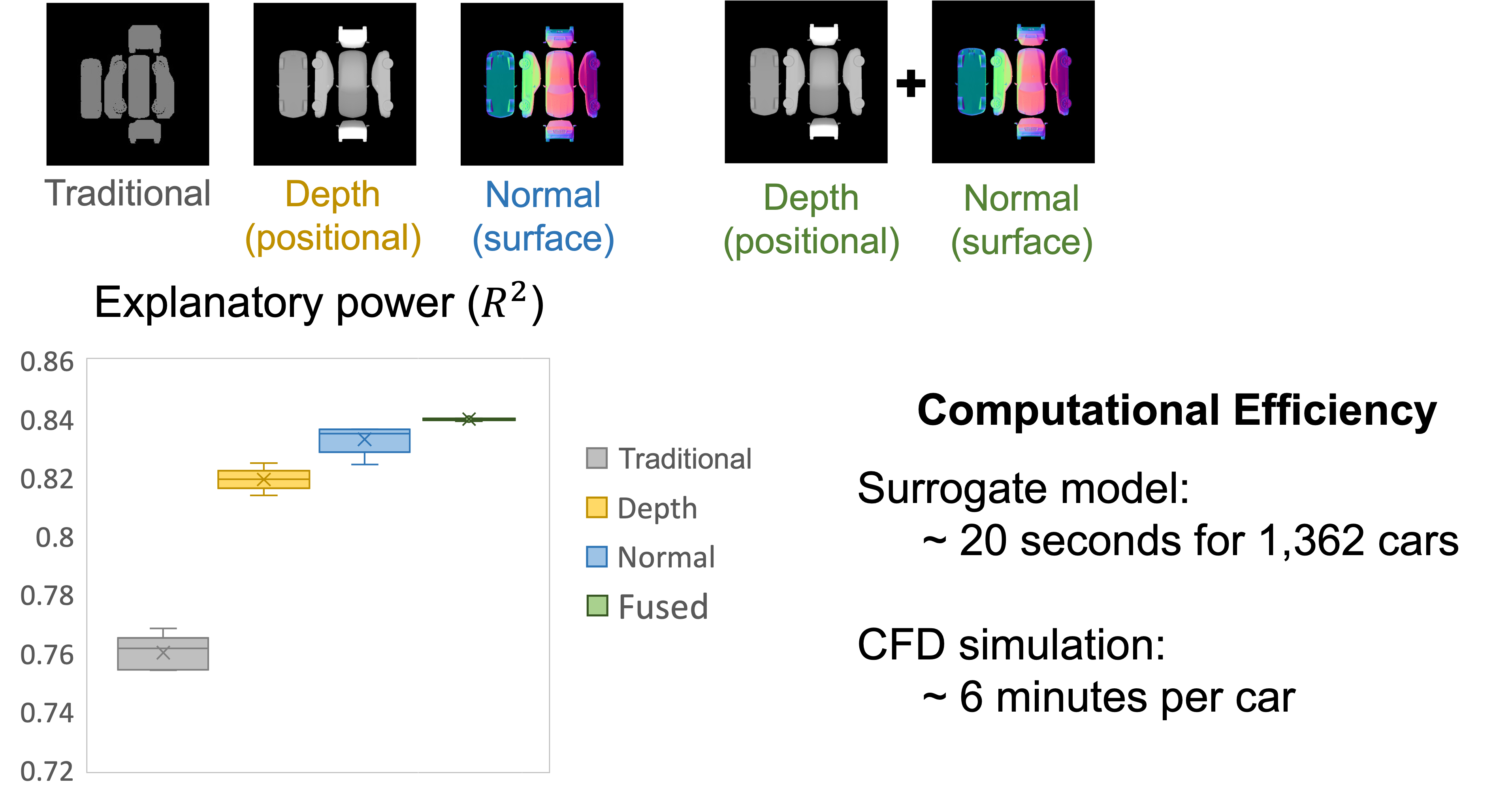

In prior work, voxels, point clouds, and meshes are commonly used to represent 3D shapes. Each requires a different type of deep neural network to learn and relies on intensive computational resources to capture fine-grained, high-resolution 3D features. For car body design, we focus only on the surface of the car and ignore any interior architecture. In this paper, we propose a new 2D representation of 3D shapes that consists of two types of renderings, namely the normal rendering and the depth rendering, both generated through orthographic projection. Specifically, the pixel values of the normal rendering encode the unit normal vector at each point of the mesh, with the x, y, and z components mapped to the red, green, and blue color channels, respectively. The pixel values of the depth rendering encode the depth of each point, i.e., the distance between the camera and the point.
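The two pixel encodings can be illustrated with a short sketch. It rests on assumptions that the text does not state: normal components in [-1, 1] are mapped linearly to 8-bit values, the camera looks down the z axis from height `camera_z`, and the depth image is built by splatting points onto a grid rather than by a full mesh rasterizer. All function and parameter names are hypothetical.

```python
import numpy as np

def normals_to_rgb(normals):
    """Map unit normals (components in [-1, 1]) to 8-bit RGB: x->R, y->G, z->B."""
    unit = normals / np.linalg.norm(normals, axis=-1, keepdims=True)
    return np.round((unit + 1.0) * 0.5 * 255.0).astype(np.uint8)

def orthographic_depth(points, camera_z, resolution=4):
    """Splat points onto an x-y grid, keeping the nearest depth per pixel.

    Orthographic projection: depth is camera_z - z, independent of x and y.
    Pixels that no point falls into stay at infinity (background).
    """
    depth = np.full((resolution, resolution), np.inf)
    xy = points[:, :2]
    lo, hi = xy.min(axis=0), xy.max(axis=0)
    span = np.where(hi > lo, hi - lo, 1.0)  # avoid division by zero
    idx = np.minimum(((xy - lo) / span * resolution).astype(int), resolution - 1)
    for (col, row), z in zip(idx, points[:, 2]):
        depth[row, col] = min(depth[row, col], camera_z - z)
    return depth

# Hypothetical sample data: two normals and three surface points.
rgb = normals_to_rgb(np.array([[0.0, 0.0, 1.0], [2.0, 0.0, 0.0]]))
points = np.array([[0.0, 0.0, 1.0], [0.0, 0.0, 0.5], [1.0, 1.0, 0.0]])
depth = orthographic_depth(points, camera_z=2.0, resolution=2)
```

Because the projection is orthographic, two points with the same x and y compete for the same pixel and only the one closest to the camera is kept, which matches the focus on the outer surface.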

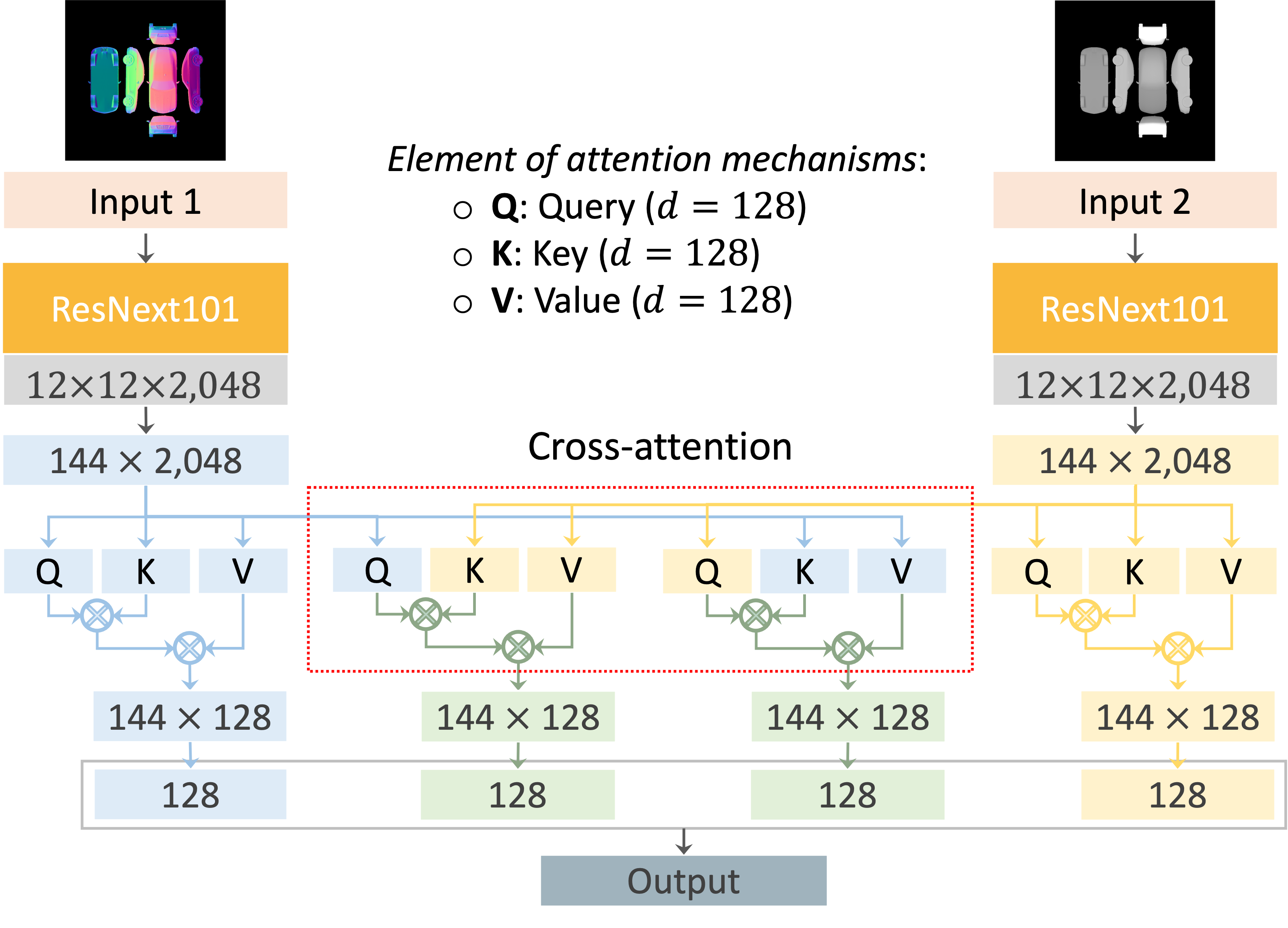

We fuse two attn-ResNeXt models, one pre-trained on the normal renderings and the other on the depth renderings, using a symmetric cross-attention mechanism. The cross-attention mechanism is expected to capture the interactions between regions of the normal renderings and regions of the depth renderings. The outputs of the self-attention and cross-attention mechanisms are then flattened and projected to a lower dimension (128) through linear layers, and the resulting vectors are concatenated to form the final embedding used to predict the car drag coefficient.
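The fusion step can be sketched in NumPy as follows. This is only a rough illustration under several assumptions: the feature maps are random stand-ins for the attn-ResNeXt outputs, the token count and channel width are made up, the attention omits learned query, key, and value projections, and all weights are untrained. Only the 128-dimensional projection and the concatenation come from the description above.

```python
import numpy as np

def softmax(x, axis=-1):
    shifted = x - x.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(shifted)
    return e / e.sum(axis=axis, keepdims=True)

def attention_weights(query_tokens, context_tokens):
    """Scaled dot-product weights: each query token attends over the context."""
    scale = np.sqrt(query_tokens.shape[-1])
    return softmax(query_tokens @ context_tokens.T / scale)

def cross_attention(query_tokens, context_tokens):
    """Queries from one modality, keys and values from the other."""
    return attention_weights(query_tokens, context_tokens) @ context_tokens

def project(tokens, weight):
    """Flatten a token matrix and apply a linear projection."""
    return tokens.reshape(-1) @ weight

rng = np.random.default_rng(0)
num_tokens, channels, embed_dim = 16, 32, 128  # 16 and 32 are assumed sizes

# Stand-ins for the self-attention outputs of the two pre-trained branches.
normal_feats = rng.normal(size=(num_tokens, channels))
depth_feats = rng.normal(size=(num_tokens, channels))

# Symmetric cross-attention: each modality queries the other.
normal_to_depth = cross_attention(normal_feats, depth_feats)
depth_to_normal = cross_attention(depth_feats, normal_feats)

branches = [normal_feats, depth_feats, normal_to_depth, depth_to_normal]
weights = [rng.normal(size=(num_tokens * channels, embed_dim)) * 0.01
           for _ in branches]
embedding = np.concatenate([project(b, w) for b, w in zip(branches, weights)])

# Hypothetical linear regression head mapping the embedding to a scalar.
head = rng.normal(size=embedding.shape[0]) * 0.01
predicted_cd = float(embedding @ head)
```

With four branches projected to 128 dimensions each, the concatenated embedding in this sketch has 512 entries; the actual number of branches in the published model may differ.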

The proposed representation is also more informative than perspective renderings as input for car drag coefficient evaluation. The models using the normal renderings and the depth renderings achieve significantly higher R^2 values and lower MSE values than the model using 2D perspective renderings. That is, the normal and depth information conveyed by the proposed representation enables the model to capture more informative features for drag coefficient prediction. Additionally, when the two renderings are used separately, the normal renderings are more informative than the depth renderings for this task.

Moreover, the proposed fused model outperforms both the depth-only and normal-only models, while also reducing the variance in prediction performance.
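For reference, the two metrics used in this comparison, R^2 and MSE, can be computed as in the sketch below. The drag coefficient values are made-up placeholders chosen only to exercise the functions; they are not results from the paper.

```python
import numpy as np

def mse(y_true, y_pred):
    """Mean squared error between simulated and predicted drag coefficients."""
    return float(np.mean((y_true - y_pred) ** 2))

def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - residual sum of squares / total sum of squares."""
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return float(1.0 - ss_res / ss_tot)

# Hypothetical CFD labels and surrogate predictions (illustrative only).
cd_true = np.array([0.30, 0.35, 0.40, 0.45])
cd_pred = np.array([0.31, 0.34, 0.41, 0.44])

error = mse(cd_true, cd_pred)
score = r2_score(cd_true, cd_pred)
```

An R^2 of 1 means the surrogate reproduces the CFD labels exactly, while a value of 0 means it does no better than always predicting the mean drag coefficient.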

Song, B., Yuan, C., Permenter, F., Arechiga, N., & Ahmed, F. Surrogate Modeling of Car Drag Coefficient with Depth and Normal Renderings. IDETC2023-117400.

@inproceedings{song2023surrogate,
  title={Surrogate Modeling of Car Drag Coefficient with Depth and Normal Renderings},
  author={Song, Binyang and Yuan, Chenyang and Permenter, Frank and Arechiga, Nikos and Ahmed, Faez},
  booktitle={International Design Engineering Technical Conferences and Computers and Information in Engineering Conference},
  year={2023},
  organization={American Society of Mechanical Engineers}
}

This research was supported in part by the Toyota Research Institute. Additionally, we thank Mr. Hanqi Su for helping us select high-quality car meshes from ShapeNet.